HGC #1: Hand Gesture Control - Overview

| GitHub | DrDEXT3R/HandGestureControl |
|---|---|
| Part 2 | Create Dataset |

Introduction

Technological progress is largely about making everyday life easier. Devices perform more and more tasks for us while offering a growing number of new features. One increasingly common feature is interaction with equipment without a standard remote control or any other dedicated controller. Instead, built-in microphones and cameras are used to receive control commands.

The advantages of voice control can be seen in everyday life: making a phone call by voice while preparing breakfast, or checking the weather for the rest of the day while choosing an outfit, are just two examples. It also has its drawbacks, however. Voice control works best with devices that make no sound of their own while the command is being spoken. We can imagine speaking to a TV or an audio amplifier to increase the volume, but the sound they produce is also picked up by the microphone. In such cases, complicated algorithms are needed to distinguish between the overlapping sounds.

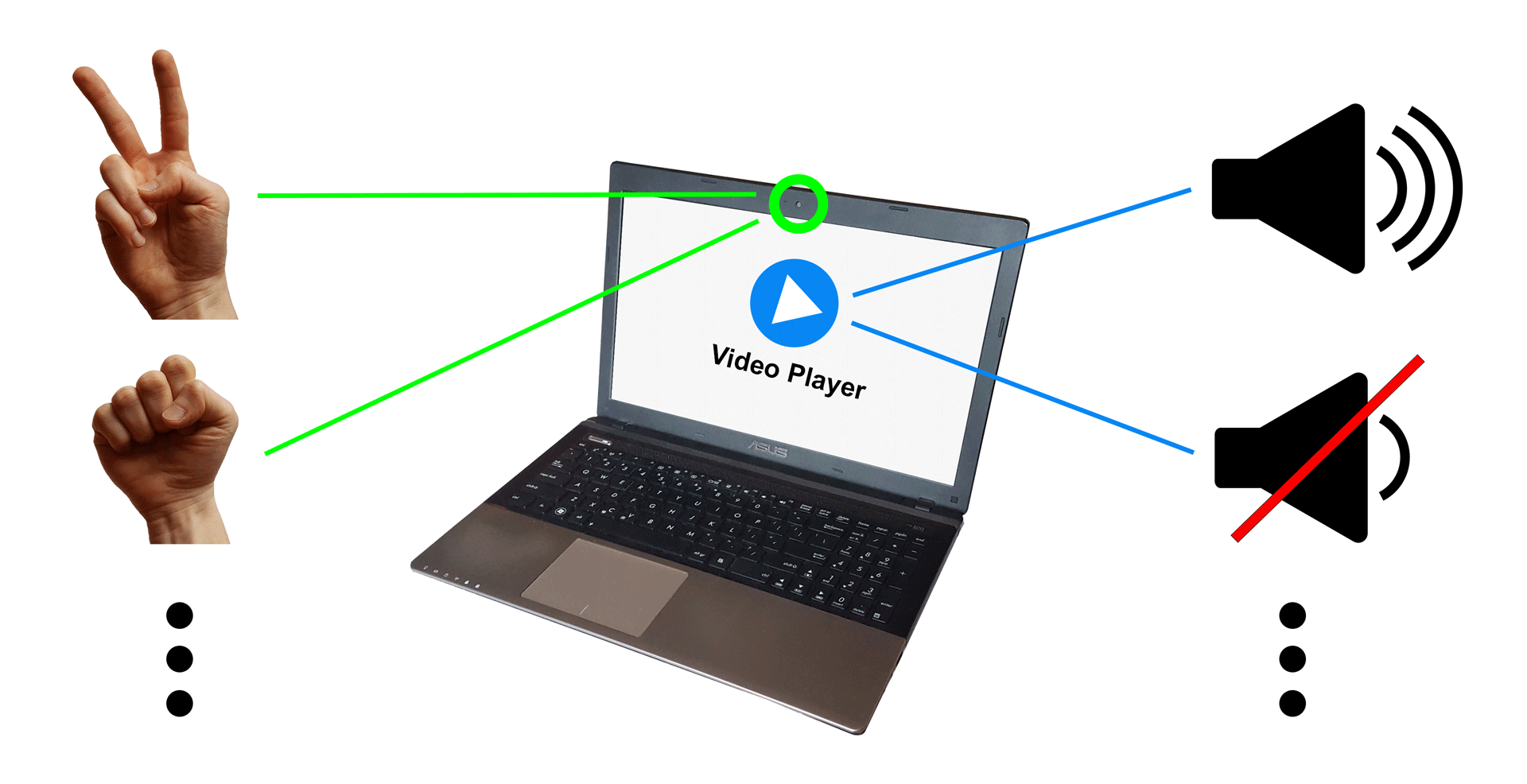

A good solution to such problems is hand gesture recognition. Specific combinations of finger positions allow commands to be sent silently, so a device can be controlled while it is playing sound.

Aim of Project

The goal is to create a program that recognizes, in real time, hand gestures shown to an average webcam. To demonstrate that the project works, the application will run on a computer, and the recognized gestures will control a video player.

Division of Labor

The work will be divided into 3 stages:

- Creating a dataset.

  This part consists of writing a program that captures an image frame from the webcam at the right moment, transforms it into a specific form, and assigns it the appropriate class (a kind of hand gesture).

- Creating a neural network.

  This stage consists of modeling and training a neural network, built from convolutional layers, that can recognize the kind of hand gesture.

- Creating a computer control program.

  This part consists of writing a program that captures an image frame, recognizes the hand gesture (using the previously trained neural network), and on that basis makes the computer perform the appropriate action.
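The last step of the third stage, turning a recognized gesture into an action, can be sketched roughly as follows. This is only an illustration: the gesture names, the action names, and the confidence threshold of 0.8 are assumptions made for this example, not the classes or values used in the project. The input stands in for the class probabilities that a trained network would output for one frame.

```python
from typing import Dict, Optional, Sequence

# Hypothetical gesture classes and player actions, chosen only for illustration.
GESTURES: Sequence[str] = ("palm", "fist", "thumb_up", "thumb_down")
ACTIONS: Dict[str, str] = {
    "palm": "play_pause",
    "fist": "stop",
    "thumb_up": "volume_up",
    "thumb_down": "volume_down",
}


def pick_action(probabilities: Sequence[float], threshold: float = 0.8) -> Optional[str]:
    """Return the action for the most likely gesture, or None if the network is unsure."""
    if len(probabilities) != len(GESTURES):
        raise ValueError("expected one probability per gesture class")
    # Index of the class with the highest predicted probability.
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    if probabilities[best] < threshold:
        return None  # ignore low-confidence frames instead of triggering an action
    return ACTIONS[GESTURES[best]]
```

Returning `None` for uncertain frames is one way to keep the player from reacting to frames in which no clear gesture is visible.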

Workplace

In my case, the entire project, including training the neural network, will be implemented on a laptop running Ubuntu. This computer has:

Hardware

- 0.3 MP webcam

- Intel Core i7-3610QM

- NVIDIA GeForce GT 630M

- 8 GB RAM

Software

- Python 3 - The programming language

- TensorFlow - An end-to-end open source platform for machine learning

- Keras - A high-level neural networks API written in Python

- OpenCV - Computer vision and machine learning software library

- NumPy - The package for scientific computing with Python

- OpenBLAS - An optimized BLAS library (Basic Linear Algebra Subprograms)

- pynput - A library for controlling and monitoring input devices

- h5py - A Pythonic interface to the HDF5 binary data format

- os - This module provides a portable way of using operating system dependent functionality

Prerequisites

Install before starting work:

- VLC media player

- Python 3

      sudo apt-get update
      sudo apt-get upgrade
      sudo apt-get install python3.6
      sudo apt-get install python3-pip

- All necessary dependencies (download)

      pip3 install -r requirements.txt

- BLAS library

      sudo apt-get install build-essential cmake git unzip pkg-config libopenblas-dev liblapack-dev

- HDF5 library

      sudo apt-get install libhdf5-serial-dev python3-h5py

In addition, you can install the CUDA toolkit and the cuDNN library so that TensorFlow can use the GPU. Remember that your graphics card must support CUDA.
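Once everything is installed, a quick check can confirm that Python is able to locate the packages listed in the Software section. The snippet below is a minimal sketch using only the standard library. The module names are the usual import names of those packages (for example, OpenCV is imported as `cv2`), and the helper `check_modules` is a name made up for this example. It only checks that each module can be found, not that it imports or runs correctly.

```python
import importlib.util
from typing import Dict, Iterable

# Import names of the packages listed above; "cv2" is the import name of OpenCV.
REQUIRED_MODULES = ("tensorflow", "keras", "cv2", "numpy", "pynput", "h5py", "os")


def check_modules(names: Iterable[str] = REQUIRED_MODULES) -> Dict[str, bool]:
    """Map each top-level module name to True if Python can locate it."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    # Print one line per module so missing packages are easy to spot.
    for name, found in check_modules().items():
        print(f"{name:<12}{'ok' if found else 'MISSING'}")
```

Any module reported as missing can usually be installed again with `pip3`.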
